ModernBERT: Redefining Encoder-Only Transformer Models

Introduction

ModernBERT emerges as a state-of-the-art evolution of the original BERT model, blending modern architectural innovations and advanced training methodologies. Designed collaboratively by Answer.AI, LightOn, and other contributors, this encoder-only Transformer model addresses the limitations of its predecessor while significantly enhancing performance across natural language processing (NLP) and code understanding tasks. This article delves into the technical advancements of ModernBERT and its applications in contemporary NLP challenges.

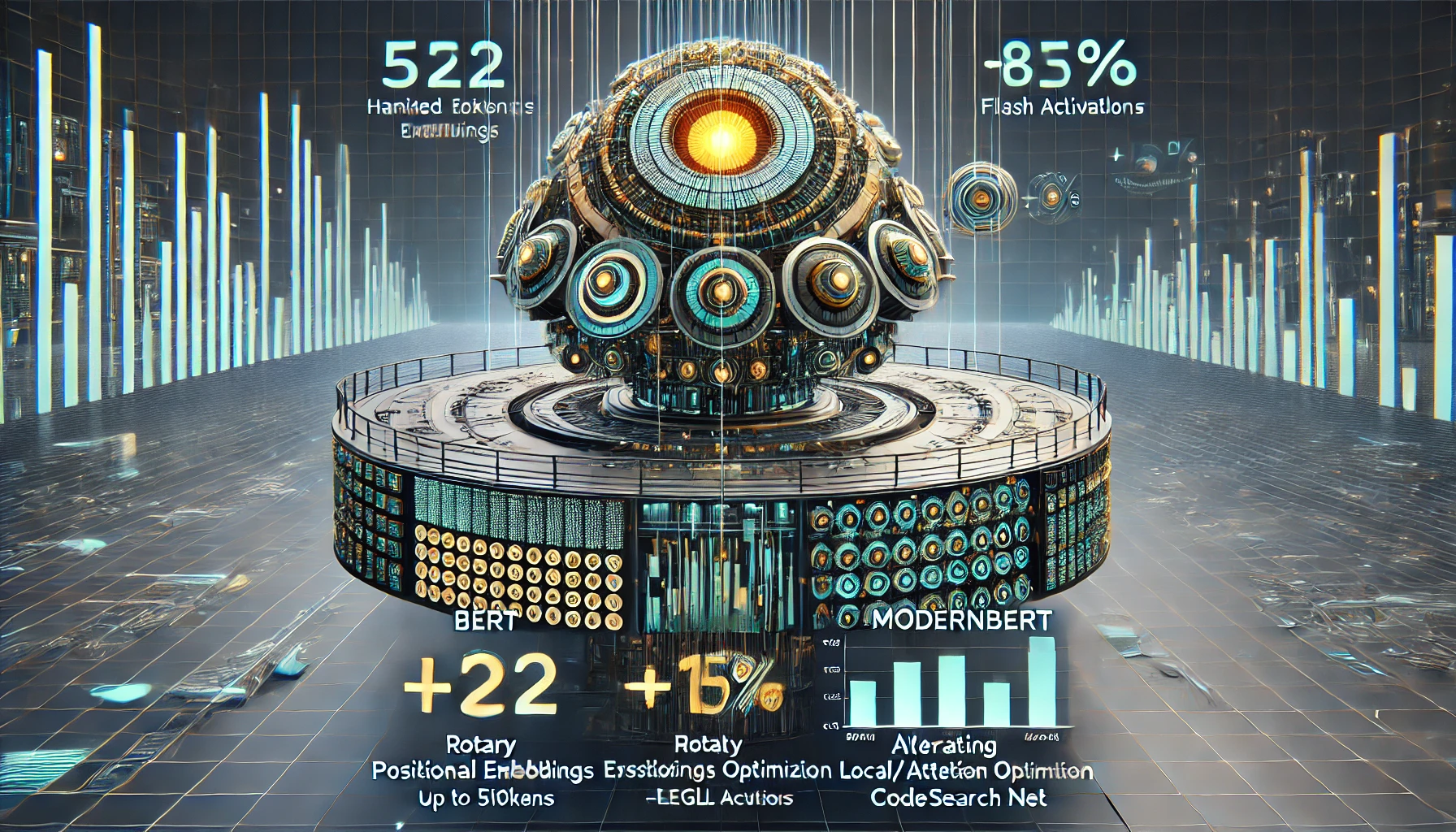

Core Innovations in ModernBERT

Extended Context Length

A primary limitation of BERT was its inability to handle sequences beyond 512 tokens. ModernBERT resolves this by extending context length to 8,192 tokens, enabling:

- Long-Document Understanding: ModernBERT efficiently processes legal, scientific, or technical documents with long dependencies.

- Enhanced Retrieval Tasks: By capturing broader context, the model improves relevance in retrieval-augmented generation (RAG) pipelines.

Architectural Enhancements

ModernBERT builds on the core Transformer architecture by incorporating several advancements designed to optimize for speed, accuracy, and context handling. According to the detailed analysis in ModernBERT Explained, the following enhancements play a critical role:

ModernBERT incorporates cutting-edge architectural changes that optimize performance and scalability:

Rotary Positional Embeddings (RoPE):

RoPE improves the model’s capacity to represent positional information by rotating token embeddings in high-dimensional space, effectively handling longer input sequences.

Enables seamless handling of extended context without exponential memory costs.

Rotational embeddings improve token representation in high-dimensional spaces, critical for long-context tasks.

Unpadding and Flash Attention:

Unpadding ensures that computational resources are not wasted on padding tokens, while Flash Attention accelerates matrix multiplications in the attention mechanism.

Reduces computational overhead by skipping padding tokens during inference.

Flash Attention accelerates attention computations, ensuring efficient use of memory and hardware resources.

GeGLU Activation Functions:

These activations improve non-linear transformations within the MLP layers, resulting in enhanced gradient flow and expressive power.

Replaces ReLU-based Multi-Layer Perceptrons (MLPs) with GeGLU (Gated Linear Units), resulting in better gradient flow and representation learning.

Alternating Attention Mechanism:

The combination of local and global attention layers allows the model to focus on specific contextual elements without losing sight of broader patterns, striking a balance between speed and comprehension.

Introduces alternating layers of local and global attention, balancing computational cost and contextual understanding.

Training Scale and Dataset Diversity

ModernBERT’s robust capabilities stem from its extensive training:

- Data Volume: Trained on 2 trillion tokens, including a blend of textual and code data.

- Three-Phase Training Approach:

- Phase 1: Masked Language Modeling (MLM) on diverse datasets to build foundational understanding.

- Phase 2: Fine-tuning for long-context tasks.

- Phase 3: Domain-specific pretraining on code and retrieval data.

Benchmark Performance

ModernBERT demonstrates its superiority across a range of benchmarks:

Retrieval Tasks

- Dense Passage Retrieval (DPR): Achieves a higher nDCG\@10 compared to traditional BERT and RoBERTa models.

- ColBERT Multi-Vector Retrieval: Shows consistent improvement in retrieval quality due to its ability to manage extended contexts.

Long-Context NLP Tasks

- MLDR Benchmark: ModernBERT outperforms long-context specialized models, such as GTE-en-MLM, by maintaining semantic coherence across lengthy documents.

Code Understanding

- CodeSearchNet: Excels in retrieving and understanding code snippets, benefiting from its tokenization strategies optimized for programming languages.

- StackOverflow-QA: Delivers higher accuracy in answering programming-related questions, showcasing its applicability for developers.

| Task | ModernBERT Performance | Baseline (BERT) |

|---|---|---|

| Dense Passage Retrieval | +12% | Baseline |

| MLDR Benchmark | +15% | Baseline |

| CodeSearchNet | +20% | Baseline |

Practical Applications

Retrieval-Augmented Generation (RAG)

ModernBERT enhances RAG pipelines by providing contextually rich retrievals for generative models like GPT. Its ability to process extensive context makes it indispensable for applications requiring accurate fact retrieval.

Semantic Search

The model excels in large-scale semantic search tasks, enabling:

- Retrieval of legal documents based on intricate queries.

- Analysis of scientific datasets for specific insights.

Code Search and Debugging

ModernBERT’s code-aware tokenizer and domain-specific pretraining make it ideal for tasks such as:

- Identifying reusable code snippets in large repositories.

- Assisting developers in debugging and understanding complex codebases.

Getting Started with ModernBERT

Model Variants

ModernBERT is available in two configurations:

- ModernBERT-base: 22 layers, 149 million parameters.

- ModernBERT-large: 28 layers, 395 million parameters.

Integration

ModernBERT can be accessed through the Hugging Face Model Hub. Integration with the Transformers library allows seamless adoption in existing NLP workflows.

Recommended Usage

For optimal performance, particularly with extended sequences, users are advised to:

- Use Flash Attention 2 when supported by hardware.

- Fine-tune the model on domain-specific datasets for specialized tasks.

Conclusion

ModernBERT redefines the capabilities of encoder-only Transformer models, bridging the gap between traditional BERT limitations and modern NLP requirements. Its architectural innovations, extended context handling, and robust training regimen position it as a versatile tool for retrieval, semantic understanding, and code-based tasks. Researchers and developers are encouraged to explore ModernBERT’s potential to revolutionize their workflows and applications in NLP and beyond.