DeepSeek Model: A New Frontier in Open-Source AI

Introduction

DeepSeek is a cutting-edge open-source large language model (LLM) designed to revolutionize natural language processing tasks. Developed by a leading Chinese AI lab, DeepSeek stands out for its impressive performance, scalability, and cost-effective training methods. Its latest version, DeepSeek V3, showcases significant advancements in architecture, parameter optimization, and task performance. This article explores its key features, utility, versions, performance benchmarks, and how it compares to other models.

Financial Impact of DeepSeek

The release of DeepSeek-R1 in January 2025 had a profound effect on global financial markets, particularly among major technology firms. DeepSeek’s cost-effective and high-performance AI models challenged the dominance of established tech giants in the AI industry, triggering significant financial repercussions.

Market Disruptions

- Nvidia, a leading AI hardware supplier, experienced a historic market value loss of approximately $589 billion, marking one of the largest declines in U.S. stock market history.

- Microsoft, Alphabet (Google’s parent company), and Broadcom also faced substantial stock declines, contributing to a global sell-off.

- Overall, these losses erased nearly $1 trillion in market capitalization from AI and computer hardware companies.

Industry leaders responded swiftly to this disruption. Sam Altman, CEO of OpenAI, acknowledged DeepSeek’s performance as “impressive” and emphasized the increasing demand for computational resources. U.S. President Donald Trump also highlighted the need for maintaining competitiveness in the American tech industry amidst these shifts.

These market movements underscore the disruptive potential of DeepSeek and its ability to reshape the AI landscape.

What is DeepSeek and Why is it Useful?

DeepSeek is an advanced LLM tailored to handle a wide range of natural language tasks, including reasoning, coding, and content generation. Unlike many proprietary models, DeepSeek is open-source, making it accessible for developers and enterprises worldwide. Its utility lies in:

- Versatility: DeepSeek excels in various tasks such as text summarization, coding assistance, and conversational AI.

- Cost-Effectiveness: Trained at a fraction of the cost of proprietary models, DeepSeek democratizes access to high-performance AI.

- Scalability: With support for large-scale deployments, DeepSeek is suitable for both individual developers and enterprise applications.

Versions and Improvements

DeepSeek V2.5

Released in December 2024, DeepSeek V2.5 introduced significant enhancements:

- Improved Mathematical Reasoning: Boosted performance on the MATH-500 benchmark from 74.8% to 82.8%.

- Enhanced Coding Accuracy: Tailored improvements for software development tasks.

- Better Writing Capabilities: Refined natural language generation for more coherent outputs.

DeepSeek V3

DeepSeek V3 represents a transformative leap in LLM technology:

- Parameter Expansion: Features 671 billion parameters, with 37 billion activated per token using a Mixture-of-Experts (MoE) architecture.

- Training Dataset: Trained on 14.8 trillion tokens, offering unmatched diversity and depth.

- Efficiency: Maintains state-of-the-art performance while optimizing training costs.

Key advancements in V3 include:

- Mixture-of-Experts Architecture: Activates only relevant parameters for each token, reducing computational overhead.

- Scalability: Enables seamless handling of complex, large-scale tasks.

DeepSeek-R1: Pioneering Advanced Reasoning

Released in January 2025, DeepSeek-R1 is designed to excel in complex reasoning tasks, including mathematics and coding. Notably, it achieves performance comparable to OpenAI’s o1 model across various benchmarks.

Key Features:

Reinforcement Learning (RL) Optimization: DeepSeek-R1 was trained using large-scale reinforcement learning without relying on supervised fine-tuning. This approach incentivized the model to develop advanced reasoning behaviors, such as self-verification and reflection.

Open-Source Accessibility: Aligning with DeepSeek’s commitment to open-source development, R1 is available under the MIT license, allowing developers to freely access and utilize the model’s weights and outputs.

Distilled Variants: To support a wide range of applications, DeepSeek has released distilled versions of R1, including models based on Llama and Qwen architectures. These distilled models offer efficient performance suitable for various deployment scenarios.

What Makes DeepSeek Stand Out?

DeepSeek offers several unique features that differentiate it from other models:

Open-Source Accessibility

- DeepSeek is fully open-source, providing developers with the freedom to modify, deploy, and optimize the model according to their needs.

Mixture-of-Experts (MoE) Architecture

- Unlike traditional dense models, DeepSeek’s MoE architecture activates only relevant parameters, making it more efficient while maintaining high performance.

Cost-Effective Training

- Trained at a fraction of the cost of proprietary models like GPT-4o and Claude 3.5 Sonnet, DeepSeek offers competitive performance without the hefty price tag.

Domain-Specific Fine-Tuning

- Tailored for tasks such as coding, reasoning, and text analysis, DeepSeek excels in specialized applications.

Advanced Reasoning Capabilities (DeepSeek-R1)

- Introduces reinforcement learning-based reasoning, enabling complex problem-solving and self-reflection capabilities.

Token Efficiency

- Achieves a token generation speed of 90 tokens per second, ideal for real-time applications.

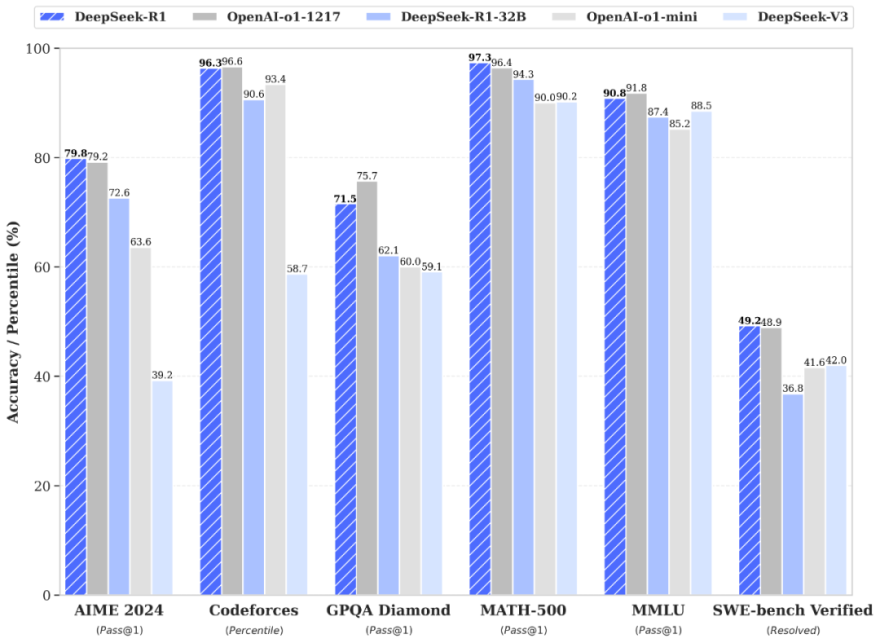

Performance Benchmarks

DeepSeek V3 and R1 deliver state-of-the-art performance across various benchmarks:

| Benchmark | DeepSeek-R1 | OpenAI-o1-1217 | DeepSeek-R1-32B | OpenAI-o1-mini | DeepSeek-V3 |

|---|---|---|---|---|---|

| AIME 2024 (Pass@1) | 79.8% | 72.6% | 79.2% | 63.6% | 39.2% |

| Codeforces | 96.3% | 93.4% | 90.6% | 58.7% | 39.2% |

| GPQA Diamond | 71.5% | 75.7% | 62.1% | 59.1% | 60.0% |

| MATH-500 (Pass@1) | 97.3% | 94.3% | 90.9% | 90.2% | 90.0% |

| MMLU (Pass@1) | 90.8% | 91.8% | 87.4% | 85.2% | 88.5% |

| SWE-bench Verified | 49.2% | 48.9% | 41.6% | 36.8% | 42.0% |

- MATH-500: Outperforms previous, excelling in mathematical reasoning.

- Reasoning Tasks: Matches or exceeds the performance of proprietary models in logical reasoning challenges.

- Code-Related Tasks:

- CodeSearchNet: Demonstrates high accuracy in code snippet retrieval and understanding.

- StackOverflow-QA: Scores consistently higher in answering technical programming questions.

- Speed: Generates tokens at 90 tokens per second, ensuring efficient real-time interactions.

Pricing Details

DeepSeek offers a flexible pricing model to accommodate various user needs, from individual developers to large enterprises:

Open-Source Access

- Free Tier: Developers can access the base model for free through GitHub, allowing for local deployment and experimentation.

API Pricing

DeepSeek’s API pricing is structured to be cost-effective, with rates varying based on the model and token usage:

| Model | Input Tokens (Cache Hit) | Input Tokens (Cache Miss) | Output Tokens |

|---|---|---|---|

| deepseek-chat | $0.014 per million tokens | $0.14 per million tokens | $0.28 per million tokens |

| deepseek-reasoner | $0.14 per million tokens | $0.55 per million tokens | $2.19 per million tokens |

These rates are significantly lower than those of proprietary models, making DeepSeek an attractive option for cost-conscious users.

For enterprise solutions, custom pricing and dedicated support are available to accommodate large-scale integrations.

Enterprise Plans

For large-scale integrations, DeepSeek offers tailored enterprise plans that provide:

- Priority Support: Dedicated assistance to ensure seamless integration and operation.

- Extended API Limits: Higher usage thresholds to accommodate extensive application needs.

For detailed information and to discuss specific requirements, interested parties should contact DeepSeek’s sales team directly.

Cost Comparison with Other Models

DeepSeek’s pricing is notably competitive when compared to other AI models:

Training Costs: DeepSeek developed its latest model for approximately $5.6 million, a fraction of the cost typically associated with large language models, which can run into billions of dollars

Inference Costs: With input token costs as low as $0.014 per million tokens for cache hits, DeepSeek’s inference costs are up to 90% lower than those of some competitors

This cost efficiency enables broader accessibility and scalability for various applications.include:

- Priority customer support

- Unlimited API access for high-demand applications

- Customizable SLA agreements and dedicated server options.

Real-World Applications

Software Development

DeepSeek excels in coding environments, providing developers with:

- Code Assistance: Auto-completion and bug detection for programming tasks.

- Documentation Generation: Generates accurate and concise documentation for codebases.

Customer Support

Enterprises use DeepSeek in customer-facing applications for:

- AI-Powered Chatbots: Delivering fast and accurate responses to customer queries.

- Sentiment Analysis: Understanding customer feedback to improve service quality.

Research and Education

DeepSeek is used in academic and research settings for:

- Document Summarization: Quickly condensing large volumes of research papers.

- Educational Platforms: Supporting adaptive learning through personalized AI-driven content.

Enterprise Data Management

Businesses leverage DeepSeek for:

- Real-Time Data Insights: Processing and analyzing large datasets for actionable insights.

- Predictive Analytics: Helping organizations forecast trends and make data-driven decisions.

How to Use DeepSeek

GitHub Repository

DeepSeek is available on GitHub, allowing developers to:

- Download the model for local deployment.

- Customize the architecture for domain-specific applications.

API Access

Enterprises can integrate DeepSeek through its API for seamless usage in:

- Chatbots

- Document summarization

- Real-time data processing

Deployment Steps

- Access the Model: Visit the official GitHub page to download the required files or sign up for API access.

- Set Up the Environment: Install dependencies such as PyTorch and Hugging Face Transformers.

- Fine-Tune the Model: Use your dataset to train DeepSeek for domain-specific tasks.

- Deploy: Host the model locally or on cloud platforms for scalable applications.

Conclusion

DeepSeek V3 is a game-changer in the world of open-source AI, combining state-of-the-art performance, cost-effectiveness, and scalability. With its Mixture-of-Experts architecture and extensive training dataset, DeepSeek offers a robust alternative to proprietary models. Whether for developers seeking customizable solutions or enterprises aiming to integrate advanced AI, DeepSeek provides the tools and flexibility needed to excel in a variety of applications. Explore its potential today to unlock the future of AI-driven innovation.