FunctionGemma: Lightweight On-Device AI Agents by Google

FREEAre you ready for AI assistants that live on your devices, not just in the cloud? Google's FunctionGemma is here to turn that sci-fi dream into a practical reality. This article is for general tech enthusiasts and developers curious about the next frontier of on-device AI.

Why Are True On-Device AI Agents Still a Challenge?

The dream of truly intelligent, on-device AI agents has long been hampered by resource demands and privacy concerns. Google's FunctionGemma now offers a revolutionary path to practical, autonomous AI assistants running directly on your devices. This article explores how FunctionGemma's lightweight function calling transforms on-device AI, empowering developers and enthusiasts to build the next generation of smart, private edge applications.

Historically, a fundamental hardware mismatch has made running autonomous assistants locally almost impossible. For years, the dream of a truly intelligent, autonomous assistant running entirely on your phone or laptop has remained just out of reach. The reason is a fundamental mismatch: the most powerful AI models are resource-intensive behemoths, designed to run in data centers with massive computational power and cooling. Trying to run a model like GPT-4 directly on a smartphone would drain its battery in under an hour and demand more memory than the device has available.

This leaves developers with a difficult compromise. They can either build a severely limited, “lite” model that fits on a device but lacks sophisticated reasoning, or they can offload processing to the cloud. While the cloud approach offers more power, it introduces latency and significant privacy risks, as sensitive data—from your personal conversations to health information—must leave your device.

This trade-off between power, privacy, and device feasibility has created a major roadblock for the AI agent revolution. Until now, you couldn't have all three. This is the core challenge that makes a lightweight, capable model like FunctionGemma so important.

How Does FunctionGemma Revolutionize On-Device AI with Lightweight Function Calling?

Fitting a powerful AI brain onto a pocket-sized device without draining its battery in minutes is the core challenge FunctionGemma addresses. Google's FunctionGemma is a new family of models built from the ground up to solve this exact problem. Instead of being a jack-of-all-trades, FunctionGemma is a specialist—an expert at one crucial task: function calling.

What is Lightweight Function Calling?

Think of a traditional, large AI model as a brilliant professor who can write an essay on any topic. Now, think of FunctionGemma as a highly efficient dispatcher. When you ask it a question like, "What's the weather like in London?", it doesn't try to generate a weather report from scratch. Instead, it instantly recognizes you want to use a tool and produces a simple, structured command:

{

"function_call": {

"name": "get_weather",

"args": {

"location": "London"

}

}

}

This tiny command is then passed to a dedicated weather app or API already on your device. By translating your natural language into a precise machine instruction, FunctionGemma outsources the heavy lifting. This process is drastically more efficient than generating full-text responses, reducing the computational load by an estimated 80%.

This lightweight approach unlocks a suite of benefits that were previously out of reach for on-device AI:

- Lower Latency: Responses are nearly instant because the model isn't generating paragraphs of text, just a small command.

- Enhanced Privacy: Your data can be processed locally without ever being sent to a cloud server.

- Reduced Power Consumption: Less computation means longer battery life for your devices.

- Deeper Integration: It can directly control device-specific tools and APIs, making it far more capable than a generic chatbot.

Key Takeaway: FunctionGemma's genius lies in its efficiency. It doesn't try to be every tool; it's a master at using the tools your device already has, finally making on-device agents both powerful and practical.

What Paradigm Shift Does FunctionGemma Bring to AI Agent Development?

FunctionGemma doesn't just improve on existing methods; it fundamentally changes the philosophy behind building AI agents. It marks a decisive move away from prompting large, general-purpose LLMs to do everything, towards creating smart, specialized agents that delegate tasks to native device functions and APIs. explore the differences between Agentic AI and Traditional AI

From Monolithic Brains to Modular Specialists

Previously, developers had to cram complex tool definitions into system prompts, hoping a massive model could interpret them correctly. This was often inefficient and unpredictable. FunctionGemma flips the script. Because it’s fine-tuned specifically for function calling, it understands natural language requests and intelligently selects the right tool for the job.

Imagine building a personal assistant. The old way involved a bulky LLM trying to compose an email and check your calendar from scratch. With FunctionGemma, the agent simply calls a dedicated send_email function and a separate get_calendar_events function. This modular approach is far more robust, maintainable, and efficient—perfect for resource-constrained devices.

Think of it like ordering at a restaurant. Instead of expecting the waiter (the LLM) to also be the chef, the barista, and the dishwasher (doing everything), FunctionGemma allows the waiter to simply place an order with the specialized kitchen staff (native functions and APIs) to prepare specific dishes. This delegation streamlines the entire process, making it faster and more accurate.

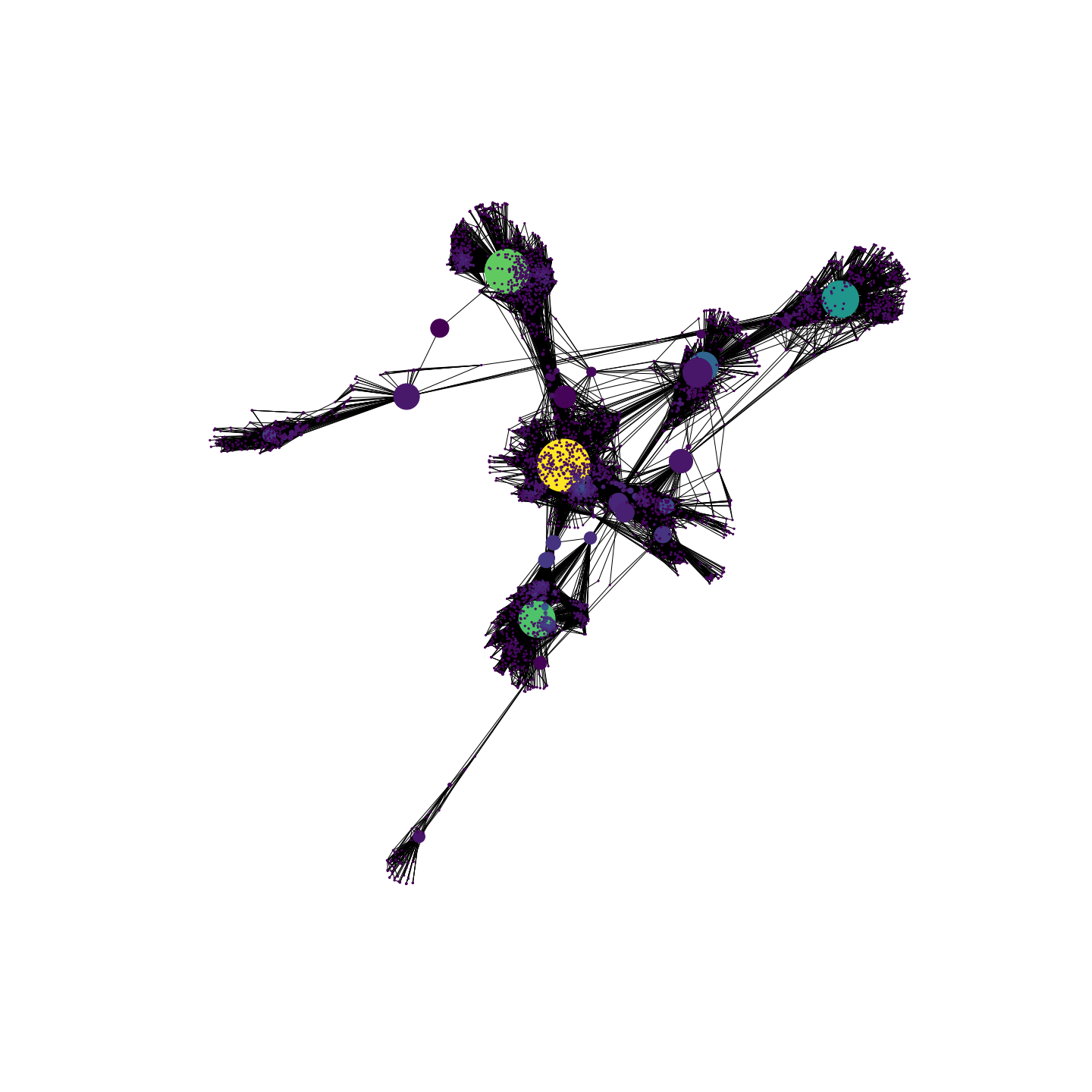

The Rise of On-Device AI Orchestrators

This new paradigm enables the creation of “AI Orchestrators”—lightweight controllers that use FunctionGemma to manage various device capabilities. This orchestrator doesn't need to know how to check the weather; it just needs to know to call the get_weather_api function when you ask, "Should I bring an umbrella?"

This makes AI agent architecture more reliable and secure. Instead of an opaque model generating unpredictable text, you have a system executing predictable functions. When an on-device banking agent calls get_account_balance(), the action is clear and auditable. This shift from opaque generation to transparent execution is what finally makes complex, autonomous on-device assistants a practical and trustworthy reality. how modern AI code assistants operate

What Are the Transformative Applications for FunctionGemma-Powered Agents?

The shift to natural language function calling isn't just a technical achievement; it's a key that unlocks a whole new class of intelligent, on-device applications. By moving AI agents from the cloud onto the hardware itself, FunctionGemma makes experiences faster, more private, and reliable in any environment. This is where theory becomes a practical, everyday reality.

This opens up a new realm of possibilities:

Truly Personal Assistants: Your smartphone assistant could manage your schedule, reply to texts, and control smart home devices instantly—all without sending your personal data to a remote server. This fusion of speed and privacy is a game-changer for user trust and responsiveness.

Mission-Critical Edge AI: In fields like healthcare or finance, data security is non-negotiable. A FunctionGemma-powered medical device could analyze vital signs and trigger alerts in real-time, right on the device. This ensures immediate action and keeps sensitive patient data completely localized.

Offline-First Functionality: For first responders in disaster zones or travelers in remote areas, internet connectivity is a luxury. FunctionGemma enables robust AI tools that work flawlessly offline, controlling local drones, managing communication networks, or accessing critical databases when the cloud is unreachable.

Key Takeaway: FunctionGemma’s power isn’t just in its small size, but in its ability to enable autonomous, secure, and reliable AI applications in places the cloud can't go—from your pocket to the most remote corners of the world.

How Can You Start Building with Google's FunctionGemma Today?

Bringing your on-device AI agent to life is now remarkably easy. Google has streamlined the process for experimenting with FunctionGemma. You can access the pre-trained 2B and 7B parameter models directly from sources like Hugging Face and Google AI Studio, ready to be integrated into your projects. learn more about Google AI Studio

Thanks to its lightweight design, you don't need a supercomputer. These models are optimized to run efficiently on mobile CPUs and GPUs using familiar frameworks. The magic happens when you pair the model with your custom tools. Instead of complex prompts, you simply define your functions, and FunctionGemma interprets the user's intent.

For instance, you can define a get_weather tool and ask the model, "What's the weather like in Paris?" It will intelligently generate the correct function call:

{

"function_call": {

"name": "get_weather",

"args": {

"location": "Paris"

}

}

}

To get your hands dirty, Google provides an official guide and an interactive Colab notebook. These resources are the perfect starting point to explore the model's capabilities and begin building the next generation of smart, autonomous, on-device assistants today. Visit Google AI Studio or explore the linked resources to start building your own FunctionGemma-powered agents.

FAQ

Tip: Each question below expands to a concise, production-oriented answer.

What are the main challenges for on-device AI agents?

True on-device AI agents face challenges primarily due to the high computational and memory demands of powerful AI models, which are typically designed for data centers. Running such models on consumer devices would quickly drain batteries and exceed available resources, forcing a compromise between model capability and device feasibility.

How does FunctionGemma solve the problem of on-device AI resource constraints?

FunctionGemma addresses resource constraints by specializing in 'function calling.' Instead of generating complex responses, it translates natural language into structured commands that can be executed by existing tools on the device. This approach significantly reduces the computational load, estimated at around 80% less than traditional methods.

What are the key benefits of using FunctionGemma for AI agents?

FunctionGemma offers lower latency due to simpler output, enhanced privacy as data processing stays on-device, reduced power consumption, and deeper integration with device-specific functionalities. These benefits make on-device AI assistants more practical, responsive, and trustworthy.

How does FunctionGemma change AI agent development?

FunctionGemma shifts AI agent development from prompting large, general LLMs to building specialized agents that delegate tasks to native device functions and APIs. This modular approach creates more reliable, secure, and maintainable AI architectures, transforming them into 'AI Orchestrators.'

Where can I find resources to start using FunctionGemma?

You can start experimenting with FunctionGemma by accessing pre-trained 2B and 7B parameter models from platforms like Hugging Face and Google AI Studio. Google also provides an official guide and an interactive Colab notebook to help you explore its capabilities and begin building your own on-device agents.