DeepSeek-V3.2: Gold-Medal AI & Efficient Daily Driver LLM

The era of the “one-size-fits-all” large language model is over. DeepSeek’s latest dual release makes that impossible to ignore. With DeepSeek-V3.2 and DeepSeek-V3.2-Speciale, you no longer pick one model and force it to do everything—you design a portfolio of models, each tuned for a different job.

This article uses DeepSeek’s new family as a concrete case study for developers, tech leads, and AI architects:

- Why monolithic LLMs are breaking down.

- When to use V3.2 as a cheap, powerful daily driver.

- When to bring in V3.2-Speciale as a gold-medal reasoning engine.

- How this dual-model strategy will reshape AI stacks and your role as a builder.

Why is the "One-Size-Fits-All" LLM Approach Breaking Down?

For years, the dominant strategy was simple: build one giant model and throw everything at it—chat, search, code, math, agents, you name it. That approach is now collapsing under its own weight.

In practice, a single “do-everything” LLM creates a constant trade-off:

- Use a frontier model for everything → you overpay for trivial work like FAQ answers or basic summaries.

- Use a cheaper lightweight model for everything → it cracks under hard reasoning: Olympiad-style math, complex coding tasks, multi-step planning.

This isn’t only a pricing problem; it’s a structural conflict in how models are optimized:

A general-purpose, low-latency chat model is tuned for:

- Fast responses

- Stable tool calls

- Latency-sensitive UX (support, copilots, assistants)

A deep reasoning engine is tuned for:

- Long internal chains of thought

- Many tokens per query

- Maximize correctness, not speed

Trying to make one model excel at both turns into a lose-lose: either it’s too slow and expensive for daily tasks, or too shallow for high-stakes reasoning.

You can see this tension in how models are now benchmarked:

- AIME 2025 / IMO 2025 – elite math contests used as math-reasoning benchmarks.

- IOI / ICPC World Finals – programming Olympiads used to test algorithmic coding and structured reasoning.

Models that crush these benchmarks often do it by thinking longer and deeper—sometimes generating tens of thousands of tokens for a single problem. That’s fantastic for a math contest… and terrible for answering “What’s in this email?” or “Summarize this meeting.”

This is why we’re seeing a clear bifurcation:

- A “daily driver” model for 95–99% of tasks: chat, coding, agents, analysis.

- A “specialist” model that only wakes up for hard, high-value questions where a 20–30% accuracy boost pays for a 2–3× cost increase.

DeepSeek’s V3.2 family doesn’t just follow this trend—it makes it explicit, with two clearly defined roles in the same release.

What Does DeepSeek-V3.2 Offer as a "Daily Driver" for General Tech Needs?

DeepSeek-V3.2 is designed to be the foundation of your AI stack: the model you can call all day, for almost everything, without destroying your budget.

Frontier performance at “daily driver” cost

Public benchmarks place V3.2 squarely in frontier territory:

- Around 93.1% accuracy on AIME 2025, on par with GPT-5-level math reasoning.

- Strong results on coding agent tests like Terminal Bench 2.0 and SWE-Verified, competitive with top proprietary assistants.

Crucially, this comes with dramatically lower costs:

- Analyses report 10–25× lower cost than closed alternatives such as GPT-5 and Gemini-3.0-Pro, depending on provider and workload.

- For long contexts (e.g., 128K tokens), inference costs can drop by 50–70%, thanks to DeepSeek Sparse Attention (DSA).

In other words: frontier-class reasoning at mid-tier prices.

DeepSeek Sparse Attention (DSA): the efficiency engine

V3.2 builds on the experimental V3.2-Exp model, where DeepSeek first rolled out DeepSeek Sparse Attention (DSA).

DSA does two key things:

- Indexes the context with a “lightning indexer” to determine which tokens really matter.

- Selects a sparse subset of tokens for full attention, skipping the irrelevant parts.

Result:

- Much cheaper long-context inference, with little to no loss in quality.

- Makes 128K-token sequences viable without needing hyperscale budgets.

For developers, that means you can:

- Feed large codebases or long documents without panicking about cost.

- Use the same model for chat + retrieval + tool use, instead of splitting workloads across three different “tiers” just to manage your bill.

Thinking while using tools: why agents love V3.2

Earlier generations of models had a nasty habit: every time they called an external tool, they would lose their reasoning thread. The context window filled up with tool I/O, and the model “forgot” why it started in the first place.

V3.2’s big practical innovation is “thinking in tool-use”:

- It can preserve a coherent reasoning chain over multiple tool calls.

- That’s crucial for agent workflows: coding agents, research assistants, customer-support orchestrators, etc.

This makes V3.2 an excellent default choice when you build:

- Multistep coding agents

- RAG pipelines with retrieval + post-processing

- Analytics assistants that hit SQL, dashboards, and BI tools

- Ops copilots that orchestrate APIs, tickets, and documentation

Key takeaway: DeepSeek-V3.2 is a frontier-level generalist—strong enough for serious coding, data analysis, and complex chat, yet cheap and efficient enough to run as your default model across most of your stack. If you only choose one DeepSeek model for day-to-day use, it’s this one.

For a broader view of how V3.2 compares with OpenAI’s latest models and other frontier options, see our midway comparison of OpenAI o3-mini vs. DeepSeek R1, and our expert guide to Mixture-of-Experts scaling efficiency.

How Does DeepSeek-V3.2-Speciale Achieve Gold-Medal AI Performance?

If V3.2 is your electric daily driver, then V3.2-Speciale is a Formula 1 car: useless for grocery runs, unbeatable on race day.

DeepSeek explicitly positions Speciale as a maxed-out reasoning model, and its results back that up:

Around 96.0% on AIME 2025, topping GPT-5-High on this benchmark.

Gold-medal performance in:

- IMO 2025 (International Mathematical Olympiad)

- CMO 2025 (Chinese Mathematical Olympiad)

- ICPC World Finals 2025

- IOI 2025 (International Olympiad in Informatics)

These are real competitions, not synthetic benchmarks. The message is clear: Speciale is built to solve the hardest math and algorithmic problems humans care about.

What makes Speciale different under the hood?

Compared to the base V3.2:

- Speciale leans heavily on prior math-optimized work (e.g., DeepSeek-Math-V2) and extends it.

- Its reinforcement learning phase is focused almost entirely on reasoning data—not casual chat or open-ended conversation.

- It runs with much longer internal reasoning traces, often generating 2–3× more tokens per complex problem than competitors like GPT-5 or Gemini-3.0-Pro.

In practical terms, Speciale is optimized for:

- Multi-step, symbolic math

- Olympiad-style algorithmic coding

- Formal reasoning (proofs, verification, logic)

It’s less interested in being fun, chatty, or super fast. It’s optimized to be right.

The cost of gold-medal reasoning

Of course, that performance comes with serious trade-offs:

- Token usage explodes on hard tasks. Several analyses report Speciale using tens of thousands of tokens per problem, easily surpassing 64k outputs in some setups.

- That means 2–3× higher cost per hard query compared to V3.2 for the same task.

- It can be significantly slower end-to-end because it “thinks longer” before answering.

- At launch, Speciale is API-only and no-tools to ensure clean, comparable evaluation of its raw reasoning capabilities.

In one of my recent projects, we simulated this bifurcation:

- Using V3.2 everywhere gave us solid results at predictable cost.

- Swapping in Speciale only for a quantitative optimization module—where a single subtle error would invalidate the whole output—boosted our solve rate by roughly 30%, but increased compute for that module by around 250%.

That’s the core story of Speciale: dramatic gains, at a dramatic price, if and only if the problem truly demands it.

Key takeaway: DeepSeek-V3.2-Speciale is not a strictly “better” model. It’s a different tool, tuned for competition-grade reasoning, where each extra point on the scoreboard is worth real money.

For context on how these results are evaluated and compared, see our expert guide on the LMArena AI benchmarking platform.

What are the Strategic Trade-offs Between DeepSeek's Dual Models?

The real question is not “Which model is better?” but “Which model is right for this job?”

DeepSeek’s release makes that choice explicit:

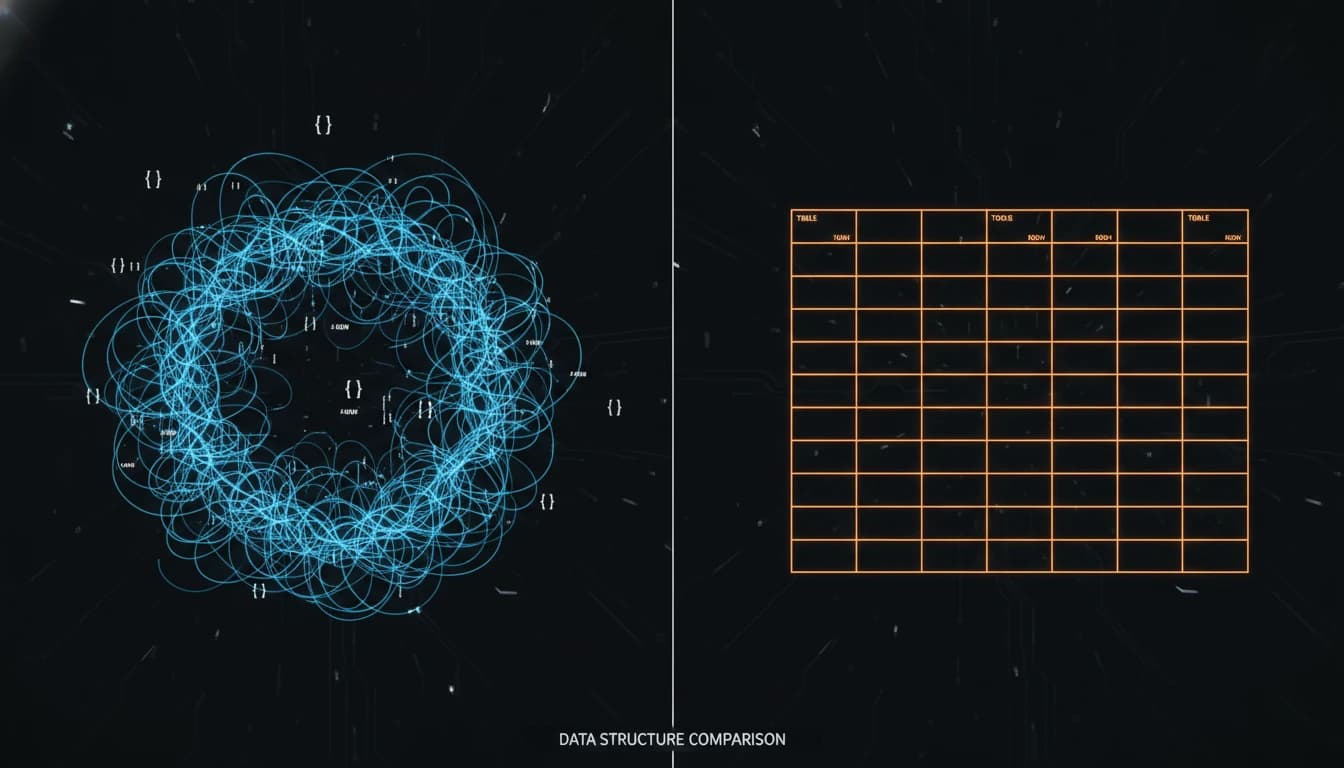

| Feature | DeepSeek-V3.2 ("The Daily Driver") | DeepSeek-V3.2-Speciale ("The Specialist") |

|---|---|---|

| Best For | General workloads: chat, coding, RAG, agents | High-stakes reasoning, Olympiad-style math & coding |

| Performance | Frontier-level, ~93.1% on AIME 2025 | Gold-medal level, ~96.0% on AIME 2025 |

| Cost | Cost-effective, efficient token usage | 2–3× more tokens on hard tasks, higher compute |

| Latency | Optimized for low-latency interactions | Slower: longer reasoning traces per query |

| Access | App, Web & API (depending on provider) | API-only, no tools at launch |

| Tool Use | Designed for agents & multi-tool workflows | Focused on pure reasoning (no tools initially) |

Benchmarks from public reports and independent analyses; exact numbers vary across providers and configs.

A simple decision checklist for builders

Use DeepSeek-V3.2 when:

You’re building:

- Chatbots, copilots, or productivity assistants

- RAG systems (documentation, knowledge bases, internal search)

- Coding assistants or lightweight code review

You care about:

- Latency (UX should feel snappy)

- Cost per request across thousands or millions of calls

- Tool orchestration (APIs, databases, search, dashboards)

Use V3.2-Speciale when:

The question is rare but very important:

- Competition-level math/coding (AIME, IMO, IOI, ICPC-style problems)

- Quantitative research, formal verification, or mission-critical algorithms

- Cases where a 20–30% boost in solve rate is worth a 2–3× cost jump.

You can tolerate:

- Higher latency (batch/offline jobs, overnight runs)

- Spiky token usage for a small subset of “elite” tasks.

A concrete example of dual-model architecture

Imagine a tech company deploying an internal engineering assistant:

90–95% of requests:

- “Explain this error message.”

- “Write a test for this function.”

- “Summarize the design doc.”

→ Route to V3.2 for fast, cheap, high-quality answers.

5–10% of requests:

- “Design a new algorithm for this scheduling problem.”

- “Prove this property about our risk model.”

- “Solve this Olympiad-style DP problem and explain the proof.”

→ Route to Speciale and accept the cost because each answer might save days of manual work.

Key Takeaway: The smart move is not to “standardize on one model,” but to standardize on a routing strategy that picks the right DeepSeek variant (or other LLM) per task.

For a deeper background on the original DeepSeek V3 architecture and open-source roadmap, you can explore the foundational V3 model

How Will DeepSeek's Bifurcation Strategy Reshape the Future of AI?

DeepSeek’s V3.2 family is more than “just another model launch.” It’s a public commitment to a future where:

- You don’t ask “Which LLM should we use?”

- You ask “Which combination of models should we orchestrate, and when?”

This shift has a few big consequences.

1. AI development moves from “pick a model” to “design a portfolio”

Instead of one monolithic LLM, you’ll increasingly see stacks like:

- One or two daily drivers (e.g., V3.2, GPT-5-Mini…)

- One or two reasoning specialists (e.g., V3.2-Speciale, o3-High…)

- A set of niche models for speech, vision, retrieval, code-search, etc.

Your job as a builder becomes:

- Mapping workload types → best model

- Quantifying the ROI of better reasoning vs. higher cost/latency

- Implementing routing, caching, and fallback logic across them

This mirrors how high-performing teams already treat cloud infra: you don’t use the same instance type for everything; you mix and match.

2. New roles: AI Architects and LLM Portfolio Managers

As model choices multiply, we’ll see more teams formally owning LLM strategy:

- AI Architects – design the end-to-end architecture of models, tools, retrieval, and observability.

- LLM Portfolio Managers – own cost, performance, and benchmarking; decide when to introduce or retire models.

Their job is not to be “prompt wizards,” but to:

- Benchmark models on real internal workloads (not just public leaderboards).

- Decide where a Speciale-class model is justified.

- Continuously tune routing policies as new models are released.

3. Benchmarks become more practical and multi-dimensional

Models like V3.2 and Speciale perform extremely well on math and programming Olympiads, but companies care about:

- End-to-end success rates on their own pipelines

- Total cost of ownership (tokens, infra, engineering time)

- Latency and UX for real users

Platforms like LMArena and community benchmark suites are already expanding beyond single scores to task-specific dashboards.

Expect more teams to run internal LMArena-style evaluations before swapping or adding models.

The Bottom Line: DeepSeek’s V3.2 release makes one thing very clear: the future of AI isn’t about finding the most powerful model, but building the smartest, most cost-aware portfolio of models—and orchestrating them well.

If you’re designing an AI stack today, your next steps are not:

- “Should we switch everything to V3.2-Speciale?”

But rather:

- Which 5–10% of our workloads deserve a Speciale-class engine?

- How do we route everything else to a cheaper daily driver like V3.2 without sacrificing quality?

On stAItuned we’re building a full “AI Architect” series on:

- Multi-model routing patterns

- Cost optimization with frontier models

- Benchmarking strategies for real-world workloads

References

- DeepSeek-V3.2 Release: Gold-Medal Performance and Thinking in Tool-Use (DeepSeek Official News) - https://api-docs.deepseek.com/news/news251201

- Introducing DeepSeek-V3.2-Exp: Experimental Sparse Attention Model (DeepSeek Official News) - https://api-docs.deepseek.com/news/news250929

- DeepSeek-V3.2 Technical Tour: Architecture and Reasoning Enhancements (Sebastian Raschka's Magazine) - https://magazine.sebastianraschka.com/p/technical-deepseek

- DeepSeek-V3.2: Open Source AI Matches GPT-5 and Gemini 3 at Lower Cost (Introl Blog) - https://introl.com/blog/deepseek-v3-2-open-source-ai-cost-advantage

- DeepSeek V3.2 Matches Gemini-3.0-Pro Performance While Cutting Costs by 70% (Aakash G News) - https://www.news.aakashg.com/p/deepseek-v32